It’s never been easier to write your own advertising campaign. Just get ChatGPT to generate the copy, use ElevenLabs to convert that text to speech, ask Suno to score an original song for the soundtrack and, if you’re feeling energetic, ask Midjourney or DALL·E to create an on-brand image of happy (but completely imaginary) customers … and you’re done.

Will the content be good? It’s hard to say. But that whole process will be about half an hour’s work, with all of the heavy lifting done for free by online generative AI (GenAI) while you’re off having a coffee.

AI technology has matured and gone mainstream, and it’s now freely and widely available. Oh, and full disclosure – while the article you’re reading was written by a human, we did ask Grammarly (a free AI service) to check for typos, grammar and spelling before it went to the copy editor’s desk.

GenAI is massive. According to Salesforce research, about 49% of surveyed people have used the technology, with more than one-third using it daily to assist with anything from customer support to internal communication to writing source code.

‘This data shows just how quickly generative AI usage has taken off in less than a year,’ says Clara Shih, CEO of Salesforce AI. ‘In my career, I’ve never seen a technology get adopted this fast. Now, for AI to truly transform how people live and work, organisations must develop AI that is rooted in trust, and easily accessible for everyone to do more enjoyable, productive work.’

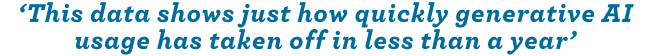

That’s already happening. A new survey of 100 SA businesses by World Wide Worx found that 11% officially use GenAI, while 24% use it unofficially and 10% do both. Just more than half of businesses using the technology said that they ‘only dabble with public services’, such as ChatGPT or Microsoft Copilot.

For chief technology officers and IT leaders, those words – ‘unofficially’ and ‘just dabbling’ – represent a growing crisis. Employees are increasingly using AI without the business sanctioning it or, for that matter, even knowing about it. This is called ‘shadow AI’, and it’s a huge problem, as Zamani Ngidi, senior client manager for cyber solutions at Aon South Africa, explains.

‘Pockets of experimentation and innovation with these new technologies are sometimes occurring outside of established data governance and cybersecurity practices, in what is coined “shadow AI”, limiting the visibility of an enlarged digital attack surface,’ he says. ‘As a result, actionable analytics are more critical than ever and will continue to be the cornerstone of informed decision-making in a rapidly evolving technological landscape.’

Vladislav Tushkanov, machine learning technology group manager at Kaspersky, elaborates. ‘GenAI tools promise better productivity as well as helping employees with most mundane and routine tasks, possibly bringing better job satisfaction. That’s why many people start using them even without proper authorisation from the employer, and why employers are contemplating proper integration of such tools in their business processes.’

Tushkanov says that Kaspersky – like most cybersecurity firms – has been using AI and machine learning algorithms to better protect its customers for close to 20 years. ‘We employ various AI models to detect threats and continuously research AI vulnerabilities to make our technologies more resilient,’ he says. ‘We also actively study how adversaries are using AI to provide protection against emerging threats. For example, in 2023, the Kaspersky Digital Footprint Intelligence service discovered nearly 3 000 posts on the dark web discussing the use of ChatGPT for illegal purposes or talking about tools that rely on AI technologies. We also found an additional 3 000 posts about stolen ChatGPT accounts and services offering their automated creation.’

There’s also the question of what kind of information is shared with the GenAI tool, and how that information is used. According to ChatGPT’s terms of use (last updated on 31 January 2024), the user retains ownership rights to the input, while OpenAI owns the output. It does, however, ‘assign to you all our right, title and interest, if any, in and to output’.

But then, just two paragraphs down, it warns that ‘we may use content to provide, maintain, develop and improve our services, comply with applicable law, enforce our terms and policies, and keep our services safe’.

What about other AI models? ‘When we get outside of the realm of OpenAI and Google, there are going to be other tools that pop up,’ Brian Vecci, CTO at cloud security firm Varonis, warned in an interview with CSO. ‘There are going to be AI tools out there that will do something interesting but are not controlled by OpenAI or Google, which presumably have much more incentive to be held accountable and treat data with care.’

Those second- and third-tier AI developers could be fronts for hacking groups; could profit in selling confidential company data; or might simply lack the cybersecurity protections that the big players have, he said. ‘There’s some version of an LLM [large language model] tool that’s similar to ChatGPT and is free and fast and controlled by who knows who. Your employees are using it, and they’re forking over source codes and financial statements, and that could be a much higher risk.’

Tushkanov agrees. He says one of the biggest cybersecurity threats emerging from employees using AI tools is data leakage. ‘Also, employees can get and act upon wrong information as a result of “hallucinations” – the situation when LLMs present false information in a confident way,’ he says.

‘A related issue might be insecure code produced by AI code assistants and used without due review. Another issue is LLM-specific vulnerabilities, such as prompt injections, when malicious actors embed hidden instructions within websites and online documents, which can then be picked up by LLM-based systems, potentially influencing search results or chatbot responses.’

Leaked information is only a problem if the information is sensitive. And while the classic ChatGPT request ‘write a limerick in the style of Eminem’ isn’t a problem, ‘please summarise this business strategy’ very much is.

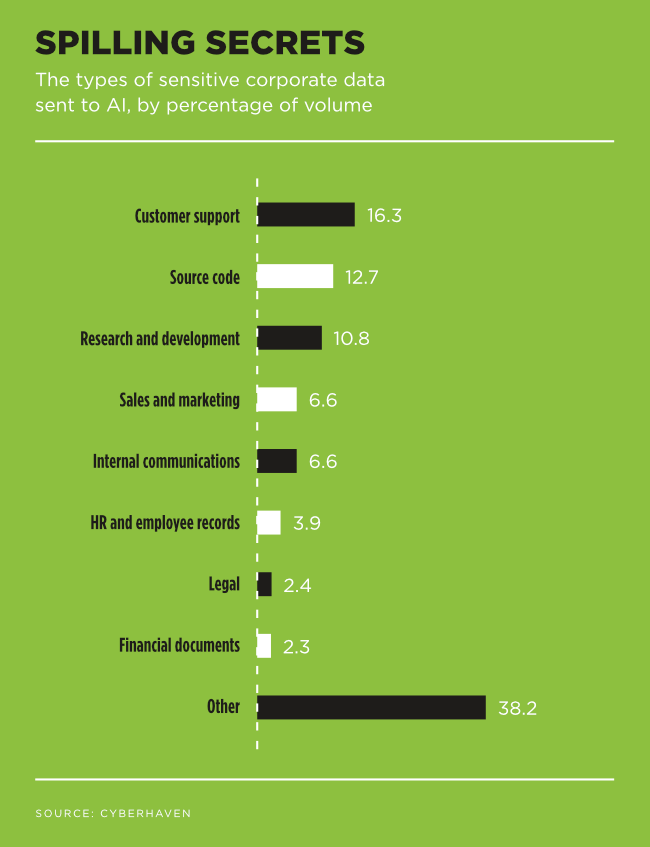

Cyberhaven’s 2024 AI Adoption and Risk report found that in March 2024, 27.4% of the corporate data that employees plugged into AI tools was sensitive, up from 10.7% in 2023. The variety of data has also grown. According to the report, customer support data made up 16.3% of sensitive data shared with AI, while source code comprised another 12.7%, research and development material 10.8%, and unreleased marketing material 6.6%.

The same Cyberhaven report, which was based on the AI usage patterns of 3 million workers, found that more than 94% of workplace use of Gemini and Bard, the Google AIs, are from non-corporate accounts.

You can see the problem. The solution, Tushkanov says, is to do a proper risk assessment. ‘Try to understand which parts of the daily business routine can be automated with GenAI tools without bringing additional risks, and where the data that is processed is either confidential or its processing is regulated by the local laws.

‘When relevant scenarios are established, the business can go from sporadic use of LLM services to a centralised approach, where the service is provided via an enterprise account with a cloud provider, with necessary safeguards – such as implementing monitoring for potential personally identifiable information in messages – and oversight, ie logging,’ he adds.

‘Combining the understanding of what data can be processed and the policies of the service provider, the business can start to educate their employees about the acceptable use of GenAI and the proper company-managed ways to access them, while maintaining control and observability.’

In January 2024 Accenture released a report stating that ‘generative AI has become an extraordinary force in enabling reinvention and accelerating organisations’ progress towards a new performance frontier. Some understand this potential and are taking action. We’re seeing this among reinventors, and also among a group of transformers that we expect to leapfrog today’s leaders by applying generative AI more intensively to their business’.

The hype is real, and so is the promise. But whether your business is rolling out an integrated GenAI strategy or leaving it up to your employees to ‘dabble’ with AI-generated content, your IT department and cybersecurity team will need to keep an eye on what’s happening in the shadows.